How to Create OpenAI-Compatible API Endpoints for Multiple n8n Workflows

Culled from Dele Odufuye’s post on Medium

At Lucidus Fortis, we faced a challenge: how do we let our entire organization chat with multiple n8n agents through platforms like OpenWebUI, Microsoft Teams, Zoho Cliq, and Slack without creating separate pipelines for each workflow?

We found a solution that transforms n8n into an OpenAI-compatible API server, allowing you to access multiple workflows as selectable models through a single integration.

The Problem We Solved

Previously, we used a pipeline from Owndev to call n8n agents from inside OpenWebUI. This worked well, but you had to implement a new pipeline for each agent you wanted to connect.

When we integrated Teams, Cliq, and Slack with OpenWebUI through its OpenAI-compatible endpoints, everything worked well. However, routing every platform through OpenWebUI as an intermediary is not the best approach.

We needed a better way to connect directly to n8n and access multiple workflows as if they were different AI models.

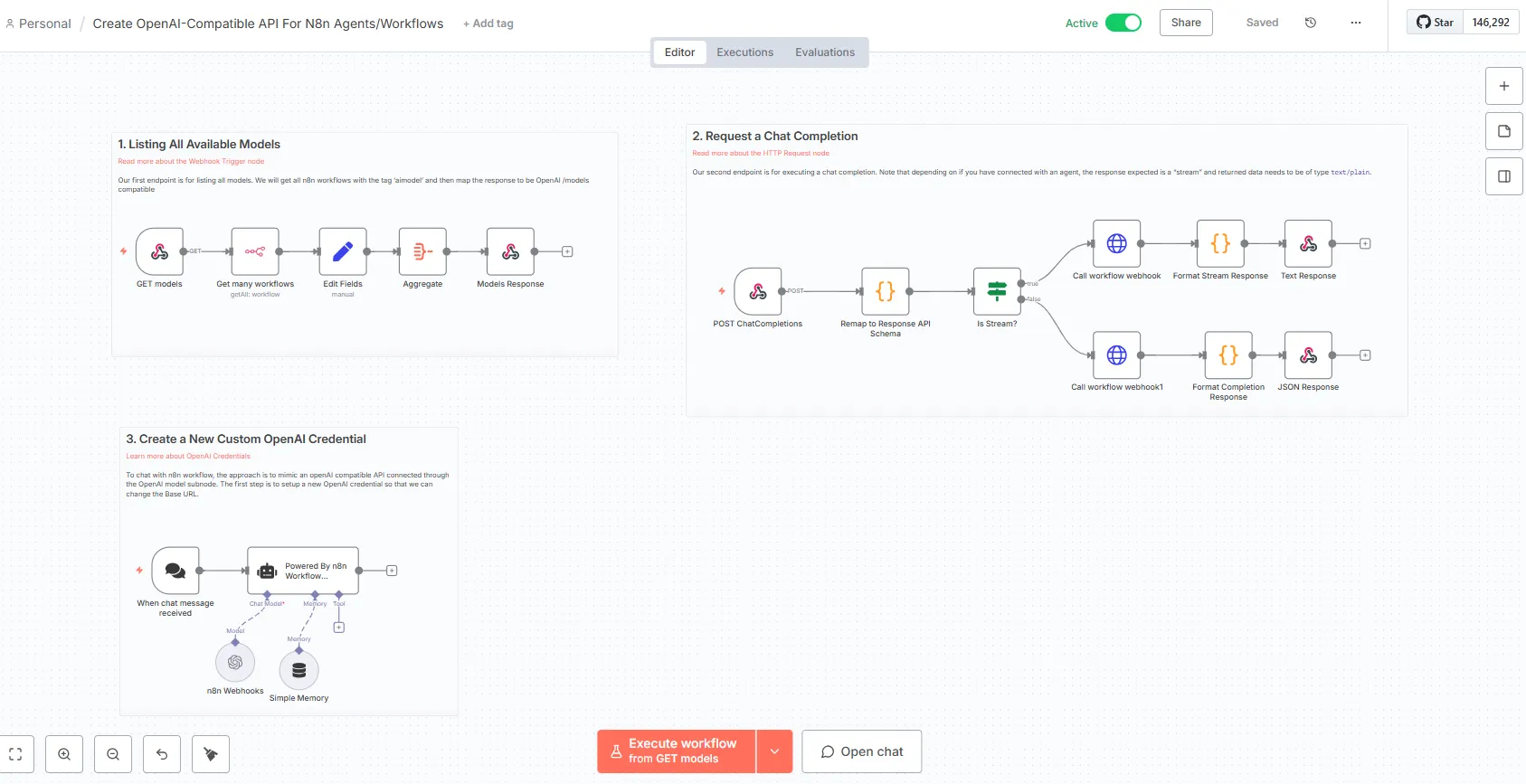

The Solution: Two Webhook Endpoints

Our solution creates two webhook endpoints that mimic the OpenAI API structure:

1. `/models` endpoint — Lists all available n8n workflows tagged as AI models

2. `/chat/completions` endpoint — Executes chat completions with selected workflows

This approach was inspired by Jimleuk’s workflow on n8n Templates.

How It Works

The /models Endpoint

This webhook searches for all n8n workflows tagged with `aimodel` and returns them in OpenAI’s model list format.

When a client requests the models endpoint, the workflow:

1. Filters workflows by the `aimodel` tag

2. Formats the response to match OpenAI’s API structure

3. Returns a list of available workflows as selectable models

Your chat application now sees each tagged workflow as a distinct model that you can choose from a dropdown.
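The filter-and-format logic above can be sketched in a few lines of Python. This is only an illustration, not the code from the actual workflow: the input shape (a `name`, a `createdAt` ISO timestamp, and a `tags` list of objects with a `name`) and the function name `to_model_list` are assumptions made for the example.

```python
from datetime import datetime


def to_model_list(workflows, tag="aimodel"):
    """Keep workflows carrying `tag` and shape them like an OpenAI model list."""
    models = []
    for wf in workflows:
        # Assumed shape: each tag is an object with a "name" field.
        tag_names = {t["name"] for t in wf.get("tags", [])}
        if tag not in tag_names:
            continue
        # Assumed field: ISO 8601 creation timestamp; fall back to 0 if absent.
        created = 0
        if wf.get("createdAt"):
            iso = wf["createdAt"].replace("Z", "+00:00")
            created = int(datetime.fromisoformat(iso).timestamp())
        models.append({
            "id": wf["name"],
            "object": "model",
            "created": created,
            "owned_by": "n8n",
        })
    return {"object": "list", "data": models}


# Hypothetical sample data: one tagged workflow, one untagged.
workflows = [
    {"name": "support-agent", "createdAt": "2024-01-01T00:00:00.000Z",
     "tags": [{"name": "aimodel"}]},
    {"name": "internal-cron", "createdAt": "2024-02-01T00:00:00.000Z",
     "tags": []},
]
result = to_model_list(workflows)
print([m["id"] for m in result["data"]])
```

Only the tagged workflow appears in the result, which is why tagging alone is enough to publish a new "model".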

The /chat/completions Endpoint

This webhook handles the actual chat interactions.

When you send a chat message, the workflow:

1. Receives the OpenAI-formatted request

2. Identifies which n8n workflow to call based on the model parameter

3. Calls the corresponding workflow via an HTTP Request node

4. Returns an OpenAI-structured response, either as regular text or as a stream for agent-based workflows

The response format matches OpenAI’s structure, making it compatible with any application that supports OpenAI’s API.
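To make the response shapes concrete, here is a minimal Python sketch of both formats. It is not taken from the workflow itself: the helper names `build_completion` and `sse_events` are hypothetical, and the field layout follows the publicly documented OpenAI chat completion and streaming chunk objects.

```python
import json
import time
import uuid


def build_completion(model, text):
    """Wrap a workflow's text output in a chat.completion-shaped dict."""
    return {
        "id": f"chatcmpl-{uuid.uuid4().hex}",
        "object": "chat.completion",
        "created": int(time.time()),
        "model": model,
        "choices": [{
            "index": 0,
            "message": {"role": "assistant", "content": text},
            "finish_reason": "stop",
        }],
    }


def sse_events(model, pieces):
    """Yield server-sent-event strings shaped like chat.completion.chunk objects."""
    base = {
        "id": f"chatcmpl-{uuid.uuid4().hex}",
        "object": "chat.completion.chunk",
        "created": int(time.time()),
        "model": model,
    }
    for piece in pieces:
        choice = {"index": 0, "delta": {"content": piece}, "finish_reason": None}
        yield f"data: {json.dumps(dict(base, choices=[choice]))}\n\n"
    # A final empty delta signals completion, followed by the [DONE] sentinel.
    last = {"index": 0, "delta": {}, "finish_reason": "stop"}
    yield f"data: {json.dumps(dict(base, choices=[last]))}\n\n"
    yield "data: [DONE]\n\n"


reply = build_completion("support-agent", "Hello")
events = list(sse_events("support-agent", ["Hel", "lo"]))
```

A client that requests streaming reads the `data:` lines one by one and concatenates the `delta.content` pieces; a non-streaming client reads `choices[0].message.content` from the single JSON body.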

Key Benefits

Multiple Workflows, Single Integration: Access all your n8n agents through one API endpoint instead of creating separate pipelines for each.

Universal Compatibility: Works with any platform that supports OpenAI-compatible APIs, including OpenWebUI, Microsoft Teams, Zoho Cliq, and Slack.

Easy Workflow Management: Add new workflows by simply tagging them with `aimodel`. No code changes required.

Streaming Support: Handles both standard responses and streaming for real-time agent interactions.

Team Accessibility: Everyone in your organization can talk to n8n agents through their preferred communication platform.

Implementation Steps

Step 1: Create the /models Webhook

Set up a webhook node with the path `/models`. Add logic to filter workflows with the `aimodel` tag and format the response:

    {
      "object": "list",
      "data": [
        {
          "id": "workflow-name",
          "object": "model",
          "created": 1234567890,
          "owned_by": "n8n"
        }
      ]
    }

Step 2: Create the /chat/completions Webhook

Set up a webhook node with the path `/chat/completions`. Configure it to:

– Accept POST requests with OpenAI-formatted messages

– Extract the model parameter to identify which workflow to call

– Use an HTTP Request node to call the target workflow

– Return responses in OpenAI’s completion format

Do not forget to secure both webhooks with header authentication.
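The routing and authentication steps can be sketched as follows. Treat this as a rough illustration under several assumptions: the `WORKFLOW_WEBHOOKS` mapping, the example URL, the `chatInput` payload field, and the lowercase `authorization` header key are all hypothetical, and in n8n the header check would normally be handled by the webhook node's own authentication setting rather than by custom code.

```python
import hmac

# Hypothetical mapping from model id to each tagged workflow's webhook URL.
WORKFLOW_WEBHOOKS = {
    "support-agent": "https://n8n.example.com/webhook/support-agent",
}


def is_authorized(headers, expected_token):
    """Check an `Authorization: Bearer <token>` header in constant time."""
    # Assumption: header names arrive lowercased.
    supplied = headers.get("authorization", "")
    expected = f"Bearer {expected_token}"
    return hmac.compare_digest(supplied.encode(), expected.encode())


def route_request(body, webhooks=WORKFLOW_WEBHOOKS):
    """Return (target_url, payload) for an OpenAI-style chat request body."""
    model = body.get("model")
    if model not in webhooks:
        raise KeyError(f"unknown model: {model!r}")
    messages = body.get("messages", [])
    # Assumption: the target workflow expects the latest user message
    # under a `chatInput` key, plus the full history for context.
    last_user = next(
        (m["content"] for m in reversed(messages) if m.get("role") == "user"),
        "",
    )
    payload = {
        "chatInput": last_user,
        "messages": messages,
        "stream": bool(body.get("stream", False)),
    }
    return webhooks[model], payload


url, payload = route_request({
    "model": "support-agent",
    "messages": [
        {"role": "system", "content": "Be brief."},
        {"role": "user", "content": "Hi"},
    ],
})
```

OpenAI-compatible clients typically send their API key as a bearer token in the `Authorization` header, so matching on that header lets the same key field in the client serve as the webhook secret.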

Step 3: Tag Your Workflows

Add the `aimodel` tag (or a tag of your choice) to any workflow with a webhook that you want to make accessible through the API.

Step 4: Configure Your OpenAI Credential

Create a new OpenAI credential in n8n and change the Base URL to point to your n8n webhook endpoints. See the n8n documentation on OpenAI credentials for more detail.

Step 5: Connect Your Applications

Point your chat applications (OpenWebUI, Teams, Cliq, Slack) to your n8n webhook URL as if it were an OpenAI API endpoint.
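For a quick check before wiring up a chat platform, the two calls a client makes can be sketched with Python's standard library. The base URL and API key below are placeholders, and the helper names are made up for this example; the sketch only builds the requests and does not send them.

```python
import json
import urllib.request

BASE_URL = "https://n8n.example.com/webhook"  # placeholder, use your own instance
API_KEY = "replace-me"  # placeholder, must match your webhook's header auth


def models_request(base_url=BASE_URL, api_key=API_KEY):
    """Build the GET request that lists the tagged workflows as models."""
    return urllib.request.Request(
        f"{base_url}/models",
        headers={"Authorization": f"Bearer {api_key}"},
        method="GET",
    )


def chat_request(model, user_text, base_url=BASE_URL, api_key=API_KEY):
    """Build the POST request that sends one user message to a workflow."""
    body = {"model": model, "messages": [{"role": "user", "content": user_text}]}
    return urllib.request.Request(
        f"{base_url}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


req = chat_request("support-agent", "Hello")
print(req.get_method(), req.full_url)
# To send it against your own instance: urllib.request.urlopen(req)
```

Any OpenAI-compatible client does the equivalent internally once its base URL is set to the webhook URL, which is why no per-platform adapter is needed.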

Real-World Use Case

At Lucidus Fortis, our team members now:

– Select from multiple specialized agents directly in Teams or Cliq

– Switch between customer support, data analysis, and research workflows without changing platforms

– Add new workflows by tagging them, instantly making them available organization-wide

Get Started

Download the complete n8n workflow JSON file from the n8n template directory at https://n8n.io/workflows/9438-create-universal-openai-compatible-api-endpoints-for-multiple-ai-workflows/ or from the GitHub repository: https://github.com/tsaboin/N8n-Worklows

Import the workflow into your n8n instance and start connecting your workflows to any OpenAI-compatible application.

This solution eliminates the need for multiple pipelines and gives you the flexibility to manage all your n8n agents through a single, standardized API interface.